In this paper, we present a computer-vision-based approach to detect eating. Specifically, our goal is to develop a wearable system that is effective and robust enough to automatically detect when people eat, and for how long. We collected about 55 hours of video from a cap-mounted camera on 10 participants in free-living conditions. We evaluated the performance of eating detection with four different Convolutional Neural Network (CNN) models. The best model achieved an accuracy of 90.9% and an F1 score of 78.7% for eating detection with a 1-minute resolution. We also discuss the resources needed to deploy a 3D CNN model on wearable or mobile platforms, in terms of computation, memory, and power. We believe this paper is the first work to experiment with video-based (rather than image-based) eating detection in free-living scenarios.

To see more from the Auracle research group, check out our publications on Zotero.

Bi, Shengjie and Kotz, David, “Eating detection with a head-mounted video camera” (2021). Computer Science Technical Report TR2021-1002. Dartmouth College. https://digitalcommons.dartmouth.edu/cs_tr/384

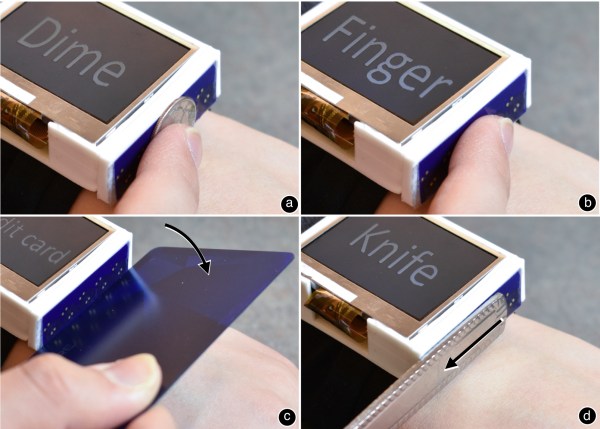

Abstract: We present Indutivo, a contact-based inductive sensing technique for contextual interactions. Our technique recognizes conductive objects (primarily metallic) that are commonly found in households and daily environments, as well as their individual movements when placed against the sensor. These movements include sliding, hinging, and rotation. We describe our sensing principle and how we designed the size, shape, and layout of our sensor coils to optimize sensitivity, sensing range, and recognition and tracking accuracy. Through several studies, we also demonstrated the performance of our proposed sensing technique in environments with varying levels of noise and interference. We conclude by presenting demo applications on a smartwatch, as well as insights and lessons we learned from our experience.

Abstract: We present Pyro, a micro thumb-tip gesture recognition technique based on thermal infrared signals radiating from the fingers. Pyro uses a compact, low-power passive sensor, making it suitable for wearable and mobile applications. To demonstrate the feasibility of Pyro, we developed a self-contained prototype consisting of the infrared pyroelectric sensor, a custom sensing circuit, and software for signal processing and machine learning. A ten-participant user study yielded a 93.9% cross-validation accuracy and 84.9% leave-one-session-out accuracy on six thumb-tip gestures. Subsequent lab studies demonstrated Pyro’s robustness to varying light conditions, hand temperatures, and background motion. We conclude by discussing the insights we gained from this work and future research questions.